SENSE THE UNSEEN

Overview.

Individual Project (Thesis)

Sept 2024 - Dec 2024

Role

UI/UX Design · Interaction Design · Experience Strategy · Multisensory Prototyping · Physical–Virtual Integration

Tools

Blender · Autodesk Fusion 360 · Arduino · Keyshot

This project proposes a VR HMD experience and a set of physical interfaces that represent users’ movements outside the field of view (FoV) through multimodal feedback, including dust-like particle motion, pebble sounds, vibration, and scent diffusion.

The final design, Sense the Unseen, was developed through iterative prototyping. In this experience, when two users interact in a shared social VR environment from positions outside each other’s view, each user perceives the other’s movement as dust particles rendered in the VR headset. Simultaneously, the Sound Stool delivers corresponding pebble sounds and vibrations, while the Scented Stool emits scent and vibration to convey spatial proximity and activity.

By integrating these multisensory expressions, the project introduces a new mode of interaction that enhances social presence within virtual environments.

Design Process.

Define

Low awareness of people outside the FoV in social VR

While attending a class in Horizon Workrooms, I turned my head and suddenly found another user much closer than expected. The unexpected proximity broke immersion and revealed a broader issue: in social VR, people outside your FoV are hard to perceive, which disrupts personal-space awareness and smooth communication.

Limits of multisensory experience from vision–audio bias

In VR, the presence of others is conveyed almost exclusively through sight and sound. With few non-visual/non-auditory cues, such as touch, vibration, airflow or warmth, it’s hard to read subtle social signals such as distance, approach direction, and speed. As a result, the quality of communication and co-presence falls short of face-to-face interaction.

Goal

Enhance social presence in social VR

by adding multisensory cues to motion outside the FoV without breaking immersion

Research

Before building, I reviewed the literature on social VR, social presence, field of view (FoV), prior work on out-of-FoV visualization in 2D and 3D environments, and multisensory experience design in VR to map the landscape and identify design levers.

Social VR Background

Explores the evolution of VR from individual immersion to shared virtual spaces, enabling real-time social interaction and co-presence.

Social Presence

Examines how users experience “being with others” in virtual environments through mutual awareness, emotional connection, and shared space.

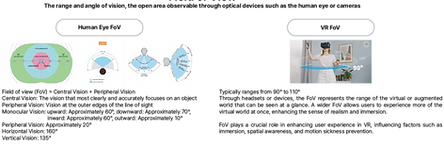

Field of View (FoV)

Highlights the role of FoV as a core factor for immersion and spatial awareness, where limited vision reduces social perception in VR.

Out-of-FoV Visualization in 2D and 3D Environments

Reviews visualization methods like radars, arrows, and audio cues that represent off-screen information but lack social and emotional depth.

2D

Traditional 2D environments have conveyed information about objects or surroundings outside the user's FoV using conventional interfaces such as radars, minimaps, arrows, and audio cues. However, these methods often only provide a simplified indication of the user’s location, with limitations in conveying more contextual information, such as movement direction or behavioral intent. Moreover, since most of this information is presented visually within the screen, it poses constraints in enhancing the user's sense of immersion.

3D

Multisensory Experience Design in VR Environments

Investigates how combining visual, auditory, haptic, and olfactory feedback enhances social presence and emotional engagement beyond visual immersion.

Insights

Integrated UX Strategy for intuitive and multisensory perception of social presence

Based on prior research, this study identified key design directions for enhancing social presence in social VR environments. It highlights the need for multisensory interfaces that allow users to intuitively perceive others beyond their field of view. By linking sensory cues to social information such as distance, movement, and emotion, the system enables a more intuitive sense of social presence. Ultimately, the goal is to move beyond visual–auditory interaction toward an integrated, embodied UX that expands emotional connection in virtual environments.

In physical environments, the presence of others outside one’s field of view is perceived through various sensory stimuli such as sound, vibration, and airflow generated by their body movements. These stimuli function as social cues and can be systematically categorized into sensory experiences of sight, touch, hearing, and smell. This study explores how such real-world sensory signals can be applied to virtual environments to translate and visualize the movement and presence of out-of-view users through multisensory feedback.

Prototype Ⅰ

As the first step, I explored how airflow, which is normally felt through touch, could be visualized inside VR. Air moves dynamically in response to people’s motion, letting us sense others’ presence even when they’re outside our view. I translated this tactile cue into visual dust-like particles that react to movement. By combining these subtle visuals with auditory and sensory elements such as wind sounds or particle motion, the experience extends beyond sight, creating a richer, more intuitive sense of social presence.

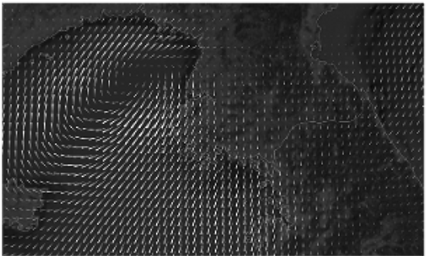

Concept

To visualize changes in airflow, I drew inspiration from the visual language of weather maps. In meteorological charts, evenly spaced grid points display arrows that indicate the direction and strength of wind at each location. Each arrow visualizes both wind direction and speed, often expressed through the arrow’s length or color intensity. Adapting this vector-field approach, I translated the invisible flow of air into a dynamic, directional visual system within the virtual environment.

Tiny, almost invisible points are evenly distributed throughout the virtual environment to form a spatial grid. When users enter the space and stand outside each other’s field of view, their movements generate dynamic cylindrical forms emerging from these points. The length and direction of each cylinder reflect the unseen user’s position and motion.

Movement of Hand, Head, Torso

Movement of Position

Classification of the User Movement

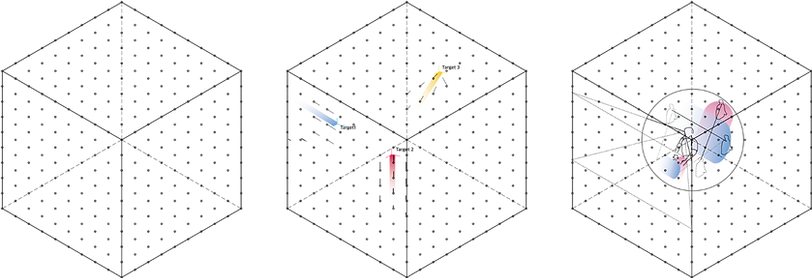

User movement was categorized based on whether positional displacement occurred, with the head, arms, and legs set as target points. Each cylinder was programmed to respond to these targets. The closer a point was to a target, the longer the cylinder extended, aligning its orientation toward the user’s position relative to that point. The particles reflecting user movement were designed to behave differently depending on the type of motion detected.
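The point-to-target behavior described above can be sketched as follows. This is a minimal illustration rather than the project's actual implementation: the function name `cylinder_for_point`, the linear falloff, and the `max_length` and `influence_radius` values are all assumptions introduced here.

```python
import math


def cylinder_for_point(point, target, max_length=1.0, influence_radius=3.0):
    """Return (length, direction) for the cylinder at one grid point.

    point, target: (x, y, z) positions in the same units.
    max_length and influence_radius are hypothetical tuning values.
    """
    offset = tuple(t - p for p, t in zip(point, target))
    distance = math.sqrt(sum(c * c for c in offset))
    if distance == 0.0:
        # The target sits exactly on the grid point: no meaningful direction.
        return max_length, (0.0, 0.0, 0.0)
    # Assumed linear falloff: full length at the target, zero at the radius.
    closeness = max(0.0, 1.0 - distance / influence_radius)
    # Unit vector pointing from the grid point toward the target.
    direction = tuple(c / distance for c in offset)
    return max_length * closeness, direction


# Usage: a small row of grid points reacting to a hand at x = 1.
grid = [(float(x), 0.0, 0.0) for x in range(-2, 3)]
hand = (1.0, 0.5, 0.0)
for p in grid:
    length, direction = cylinder_for_point(p, hand)
    print(p, round(length, 2), tuple(round(d, 2) for d in direction))
```

Points nearer the target produce longer cylinders, and every cylinder points toward the target, which gives the vector-field look borrowed from weather maps.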

Upper Body Movements:

Location of Particles Appearing on the Screen

For the head, hands, and torso movements that convey social signals during seated conversations, particle responses were concentrated near the left and right edges of the user’s field of view. This enhances the user’s ability to sense subtle gestures and micro-movements of nearby individuals, reinforcing an embodied awareness of others.

Positional Movement:

Location of Particles Appearing on the Screen

Positional movements involve larger spatial shifts within the environment. To represent this, particle flows were generated across a wider area of the scene, visually expanding the user’s perception of motion throughout the space. This approach allows users to intuitively detect movement and presence beyond their visual range, deepening the sense of spatial connection and awareness within the virtual environment.

VR Space Design

To create a new sensory experience that enables users to perceive others beyond their field of view, the virtual environment was designed as the foundation. The entire space was composed of cylindrical structures made of fine, thread-like particles, visually harmonizing with the cylindrical particle system that appears during interaction. These thread-like forms served as anticipatory cues, allowing users to intuitively expect where the next particle movements would occur and fostering a sense of visual flow and spatial anticipation within the environment.

Positional Movement

When a user changes their position outside the FoV, larger cylinders are generated from the points along the direction of movement, from left to right or right to left. These cylinders visually represent the airflow changes caused by positional movement, enabling users to sensorially perceive dynamic air currents in the surrounding space.

Upper Body Movements

When a user moves their upper body outside the FoV, arrows are generated from the points located in the direction of the movement (left or right). Similar to meteorological wind maps, these arrows visualize both the direction and intensity of the movement, allowing users to intuitively perceive the presence and motion of others beyond their visible range.

Prototype Ⅱ

As the second prototype, I explored how the sound of wind could represent others’ movements outside the user’s view. By translating airflow changes into subtle wind sounds, the system allows users to sense the presence and motion of others through hearing, extending awareness beyond the visible field.

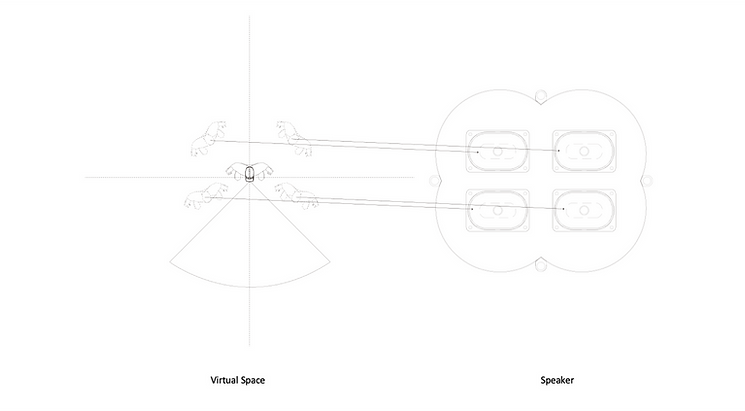

A speaker system was designed to spatially deliver wind sounds generated by airflow changes. Based on the same principle as Prototype Ⅰ, subtle wind sounds are produced when the user moves their head or controller, while stronger wind sounds are generated when the user’s position shifts within the space.

The sound output is divided across six spatial planes, so the system can respond to the user’s location in real time with appropriate surround sound.

Wind Sound Generated by Upper Body Movement

Wind Sound Generated by Positional Movement

The prototype transforms invisible airflow into sound, allowing users to hear presence beyond their sight and turning virtual space into a shared sensory field.

Prototype Ⅲ

The third prototype allows users to physically experience vibrations generated by another person’s movement outside their field of view through an external device.

Based on an analysis of user behavior in VR environments, it was observed that people often remain seated in a fixed position while using a headset. Accordingly, a chair was designed to translate floor vibrations into a tactile and auditory experience, enabling users to hear and feel the movement of others through synchronized sound and haptic feedback in the physical space.

By using a speaker–amplifier system to generate vibrations, the setup translates sound frequencies into tactile feedback, causing the particles placed on the speaker to visibly react to the vibrations. These vibrations, transmitted through the chair, allow users to sense and interpret the movements of others who are not visible within their field of view, transforming invisible motion into a perceivable multisensory experience.

In this prototype, the user’s virtual position is mapped to the physical placement of speakers and amplifiers in the real space. The surrounding area is divided into four zones: front, back, left, and right. Movements detected in each direction trigger corresponding vibrations through the aligned speakers. The intensity of the vibration adjusts dynamically based on the distance between the user and the person located outside their field of view: the closer the other user is, the stronger the vibration becomes. This setup allows users to intuitively sense the presence and direction of others, even beyond their visual field, through spatialized tactile feedback.
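The zone and intensity mapping described above can be sketched as follows. This is only an illustration under stated assumptions: the 90-degree zone boundaries, the linear distance falloff, the `max_distance` value, and all function names are hypothetical and not taken from the prototype.

```python
import math

ZONES = ("front", "right", "back", "left")


def zone_of(user_pos, user_yaw_deg, other_pos):
    """Classify the other user into one of four zones around the seated user.

    Positions are (x, z) floor coordinates. A yaw of 0 means facing +z, and
    yaw increases clockwise toward +x (an assumed convention).
    """
    dx = other_pos[0] - user_pos[0]
    dz = other_pos[1] - user_pos[1]
    bearing = math.degrees(math.atan2(dx, dz))
    relative = (bearing - user_yaw_deg) % 360.0
    # Each zone covers 90 degrees, centred on its axis.
    return ZONES[int(((relative + 45.0) % 360.0) // 90.0)]


def vibration_intensity(distance, max_distance=5.0):
    """Map distance to a 0..1 intensity: closer means stronger (assumed linear)."""
    clamped = min(max(distance, 0.0), max_distance)
    return 1.0 - clamped / max_distance


def speaker_levels(user_pos, user_yaw_deg, other_pos, max_distance=5.0):
    """Return an intensity per zone; only the zone containing the other user is driven."""
    distance = math.hypot(other_pos[0] - user_pos[0], other_pos[1] - user_pos[1])
    levels = {zone: 0.0 for zone in ZONES}
    zone = zone_of(user_pos, user_yaw_deg, other_pos)
    levels[zone] = vibration_intensity(distance, max_distance)
    return levels


# Usage: another user standing behind and slightly to one side of the seated user.
print(speaker_levels((0.0, 0.0), 0.0, (0.5, -2.0)))
```

Driving a single zone at a time keeps the directional cue unambiguous; blending between neighbouring speakers would be a possible refinement.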

Inside the stool, a built-in system is installed that allows the speaker and gravel mechanism to operate.

The prototype turns invisible movements into tangible sensations, where vibration and sound let presence be felt, even without sight.

Insights

Through the prototypes, I explored how airflow and vibration could visualize and sonify the presence of others beyond the user’s field of view in VR. The study revealed that while invisible movements can be perceived through multisensory cues, clearer synchronization between virtual and physical sensations is essential. Building on this insight, the next phase focuses on creating an immersive system where the flow of wind and vibration can be both seen and felt, deepening the sense of social presence.

SENSE THE UNSEEN

CONCEPT

The final design, Sense the Unseen, is a multisensory interface system that integrates the VR environment, Scented Stool, and Sound Stool to enable users to perceive the presence of others located outside their field of view.

In the virtual space, users’ positions and upper-body movements, such as head, hand, and torso gestures, are shared in real time through particle-based visualizations within the social VR system.

These positional data are then transmitted to the corresponding physical interfaces in the real world. The Scented Stool conveys movement through scent diffusion and vibration, while the Sound Stool translates it into synchronized sound and tactile feedback, allowing users to intuitively sense each other’s presence beyond visual boundaries.

USER FLOW

This flow illustrates how Sense the Unseen connects movements in the virtual environment with multisensory feedback in the physical world. When an out-of-view user moves, their actions are visualized as dust-like particles in VR, while the Scented Stool and Sound Stool respond with corresponding scent, sound, and vibration. The closer the other user is, the stronger the sensory feedback becomes, allowing users to intuitively sense presence beyond their field of view.

Social VR Space

"Visualize"

Body Movement & Position of Users Outside FoV

Two users each select an avatar and enter the virtual space.

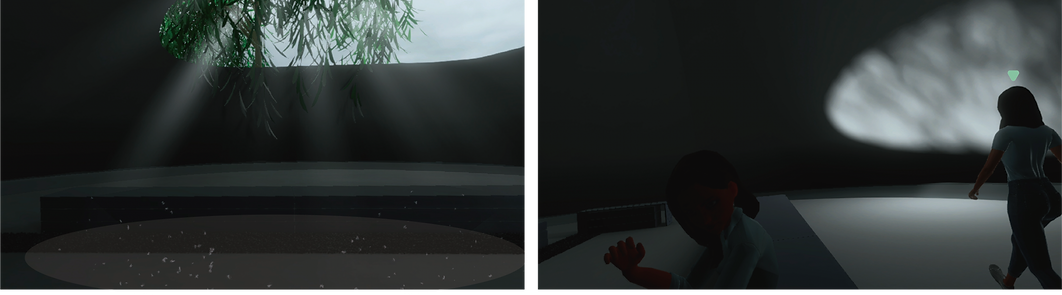

The Social VR environment was designed to visualize the airflow generated by users’ movements outside each other’s field of view, allowing them to experience a sense of social presence. The virtual space reflects the materials and tactile qualities of the physical devices used, incorporating natural elements such as stone and water to enhance sensory continuity and realism. Additionally, airflow was visualized through dust-like particles, adding environmental detail that evokes the texture of the real world.

Upon entering the virtual space, User 1 and User 2 are seated facing forward in fixed positions, unable to see each other. On both sides, two virtual users are seated in the same fixed positions, with their head, arm, and torso movements reflected in real time. Behind them, a third virtual user continuously moves along a predefined path.

Dust-like Particle Reflecting Head, Hand and Torso Movement

For User 1, the movements of the virtual users seated on either side, their head, hands, and torso, are perceived through dust-like particles displayed within the VR HMD. These particles appear as subtle motions in the lower left and right areas of the screen, allowing the user to sense movement beyond direct sight. Similarly, User 2 can perceive the movements of User 1 and the adjacent virtual users through the same particle-based visual cues.

Dust-like Particle Reflecting Positional Movement

In addition, both User 1 and User 2 can perceive the movement of Virtual User 3, who exists outside their field of view and is continuously changing position. This movement is represented by dust-like particles flowing across the entire screen, moving in the same direction as Virtual User 3’s trajectory.

VR Interface

"Audify

Scentify

Tactilization"

User’s Positional Movement Outside the FoV

Sound Stool

Scented Stool

Final Ideation, Sketch & Prototype

The Sound Stool and Scented Stool designs were refined through experiments, sketches, and renderings that tested speaker frequencies, vibration responses, material finishes, and scent diffusion methods to determine the most effective form and sensory expression before final prototyping.

Stainless steel

400(w) x 450(h) x 400(d) mm

3D Printing, Transparent acrylic

796(w) x 400(h) x 418(d) mm

Based on these explorations, the final designs of the Scented Stool and Sound Stool were completed.

When a user enters the application while seated on the Sound Stool or Scented Stool, the distance between that user and Virtual User 3 is calculated in real time, transmitted to an Arduino, and converted into physical feedback.

The distance from each of User 1 and User 2 to Virtual User 3 within the social VR space is expressed on a scale from 0 (closest) to 255 (farthest).

The distance values are categorized into five levels, each mapped to specific speaker frequencies embedded within the Sound Stool and Scented Stool. The closer the virtual user is, the lower the frequency; the farther the distance, the higher the frequency.
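The distance-to-frequency mapping can be sketched as follows. This is a Python illustration of the logic only, not the Arduino code used in the project: the equal-width level boundaries, the `max_distance` parameter, and the values in `FREQUENCIES_HZ` are hypothetical placeholders, since the original thresholds and frequencies are not stated.

```python
# Hypothetical speaker frequencies, one per level, lowest for the closest level.
FREQUENCIES_HZ = (40, 60, 80, 100, 120)


def distance_to_byte(distance, max_distance):
    """Scale a VR-space distance to 0 (closest) .. 255 (farthest)."""
    clamped = min(max(distance, 0.0), max_distance)
    return int(255 * clamped / max_distance)


def byte_to_level(value, levels=5):
    """Split the 0..255 range into equal-width levels; level 0 is the closest."""
    return min(value * levels // 256, levels - 1)


def frequency_for_distance(distance, max_distance):
    """Closer virtual user gives a lower frequency, farther gives a higher one."""
    level = byte_to_level(distance_to_byte(distance, max_distance))
    return FREQUENCIES_HZ[level]


# Usage: Virtual User 3 walking away from a seated user in a 10-unit room.
for d in (0.0, 2.5, 5.0, 7.5, 10.0):
    print(d, distance_to_byte(d, 10.0), frequency_for_distance(d, 10.0))
```

A single byte per reading fits the 0 to 255 scale and is a convenient size to send to a microcontroller, which can then look up the level and drive the speaker.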

Pebbles

Pebbles

Speaker

Scent

Water

Speaker

Both stools share a similar structure, but each delivers distinct sensory feedback.

In the Sound Stool, the speaker vibrations cause pebbles placed on top of it to bounce and strike the metal plate of the chair, generating both tactile vibrations and sound. The intensity of this vibration and sound correlates with proximity: stronger feedback indicates that the virtual user is closer.

In the Scented Stool, the speaker vibrations are transmitted through the chair’s plate, causing ripples in a small water container above it. The diffusion of the scent placed on the water surface changes according to vibration strength: the higher the frequency, the more dynamic the water movement and scent dispersion.

As the distance between users decreases, the intensity of sound, vibration, and scent increases, allowing them to sense each other’s presence through multisensory feedback.

Multisensory Experience

Insights.

This project reinterpreted social presence by moving beyond the purely visual representation of out-of-FoV movements, expanding it through multisensory feedback using airflow, vibration, and scent. The design integrates visual, auditory, tactile, and olfactory cues that complement one another, allowing users to intuitively perceive the presence of others beyond their sight.

Through this process, I learned that the essence of sensory technology lies not in complex implementation but in the balance and synchronization between senses. It also deepened my understanding of how non-visual senses enhance immersion and emotional engagement in virtual environments.

However, aspects such as the intensity, timing, and cognitive load of sensory feedback remain to be quantitatively verified. In future development, I aim to evolve this work into a personalized multisensory feedback system and extend it toward multi-user interaction design for richer social experiences.

Achievements.

2025 SIGGRAPH IMMERSIVE PAVILION